Web scraping

02

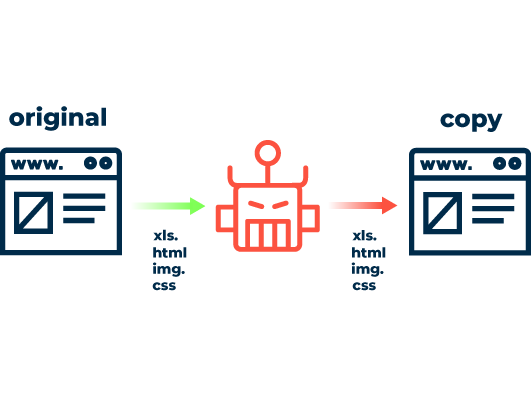

How does web scraping work?

A variety of technologies and tools are employed in web scraping:

Manual scraping

Both content and parts of a website's source code are sometimes copied by hand. Criminals on the internet resort to this method particularly when the site's robots.txt file excludes bots and other scraping programs, or when such programs are otherwise blocked.

Software tools

Web scraping tools such as Scraper API, ScrapeSimple and Octoparse enable the creation of web scrapers even by those with little to no programming knowledge. Developers also use these tools as the basis for developing their own scraping solutions.

Text pattern matching

Automated matching and extraction of information from web pages can also be accomplished by using commands in programming languages such as Perl or Python.
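As a minimal sketch of text pattern matching in Python, the following uses a regular expression to pull prices out of a page fragment. The markup in SAMPLE_HTML, the "price" class name, and the function name extract_prices are assumptions made for illustration; real pages will need their own patterns.

```python
import re

# Hypothetical page fragment; real markup differs from site to site.
SAMPLE_HTML = """
<ul>
  <li class="product">Widget A <span class="price">19.99 EUR</span></li>
  <li class="product">Widget B <span class="price">5.00 EUR</span></li>
</ul>
"""

# A decimal amount followed by a three-letter currency code inside a price span.
PRICE_PATTERN = re.compile(
    r'<span class="price">\s*(\d+\.\d{2})\s*([A-Z]{3})\s*</span>'
)


def extract_prices(html):
    """Return (amount, currency) pairs for every price span found in html."""
    return [(float(amount), currency)
            for amount, currency in PRICE_PATTERN.findall(html)]


if __name__ == "__main__":
    for amount, currency in extract_prices(SAMPLE_HTML):
        print(amount, currency)
```

Patterns like this are quick to write but brittle: a small change to the markup, such as an extra attribute on the span, is enough to break the match.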

HTTP manipulation

Content can be copied from static or dynamic websites using HTTP requests.
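A plain HTTP GET request is often all that is needed to retrieve a page. The sketch below uses only Python's standard library; the function name fetch_page, the user agent string, and the example URL are assumptions for illustration and not part of any particular scraping tool.

```python
import urllib.request


def fetch_page(url, user_agent="example-scraper/0.1", timeout=10.0):
    """Fetch url with a GET request and return the body decoded as text."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


if __name__ == "__main__":
    # Placeholder URL; replace it with a page you are permitted to fetch.
    print(fetch_page("https://example.com/")[:200])
```

For dynamic websites, the same kind of request is typically sent to the endpoint that delivers the data to the page rather than to the page itself.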

Data mining

Data mining can also be used for web scraping. To do this, web developers rely on an analysis of the templates and scripts in which website content is embedded. They identify the content they are looking for and use a “wrapper” to display it on their own site.

HTML parser

HTML parsers of the kind used in browsers are employed in web scraping to parse pages and extract the desired content.
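To illustrate the parser-based approach, here is a small sketch built on Python's standard html.parser module that collects every link and its text from a page. The class name LinkExtractor and the helper extract_links are hypothetical names chosen for this example.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag together with its link text."""

    def __init__(self):
        super().__init__()
        self.links = []    # list of (href, text) tuples
        self._href = None  # href of the <a> currently being read, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse html and return all (href, text) pairs found in it."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

Unlike a regular expression, the parser follows the document structure, so nested tags inside a link do not disturb the extraction.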

Copying microformats

Microformats are a frequently used component of websites. They may contain metadata or semantic annotations. Extracting this data enables conclusions to be drawn about the location of special data snippets.
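As a rough sketch of microformat extraction, the following reads plain-text properties from markup that follows the microformats2 class naming convention (for example h-card with p-name). The class name MicroformatExtractor and the sample markup are assumptions for illustration, and the sketch deliberately ignores nested properties.

```python
from html.parser import HTMLParser


class MicroformatExtractor(HTMLParser):
    """Collect the text of elements carrying a microformats2 'p-*' class.

    Simplification: properties nested inside another property are not
    reported separately; their text becomes part of the outer value.
    """

    def __init__(self):
        super().__init__()
        self.properties = {}  # property name -> list of text values
        self._prop = None     # property currently being captured
        self._tag = None      # tag name of the element that opened it
        self._depth = 0       # nesting depth of that same tag name
        self._text = []

    def handle_starttag(self, tag, attrs):
        if self._prop is not None:
            if tag == self._tag:
                self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        prop = next((c for c in classes if c.startswith("p-")), None)
        if prop is not None:
            self._prop, self._tag, self._depth, self._text = prop, tag, 1, []

    def handle_data(self, data):
        if self._prop is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._prop is None or tag != self._tag:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace into single spaces.
            value = " ".join("".join(self._text).split())
            self.properties.setdefault(self._prop, []).append(value)
            self._prop = None


def extract_properties(html):
    """Return a dict mapping each 'p-*' property to its text values."""
    parser = MicroformatExtractor()
    parser.feed(html)
    parser.close()
    return parser.properties
```

Because the class names carry the semantics, the scraper does not need to know where on the page a name or address appears; it only needs to recognize the annotation.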

03

Use and areas of application

Web scraping is employed in many different areas. It is always used for data extraction, often for completely legitimate purposes, but misuse is also common practice.

Search engine web crawlers

Indexing websites is the basis for how search engines like Google and Bing work. Only by using web crawlers, which analyze and index URLs, is it possible to sort and present search results.

Web crawlers are “bots”: automated programs that perform defined, repetitive tasks.
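Well-behaved crawlers consult a site's robots.txt file before requesting pages. The sketch below shows how such a check might look with Python's standard urllib.robotparser module; the robots.txt content, the user agent name, and the shop.example URLs are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_policy(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Return only the URLs that robots.txt permits for user_agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    policy = build_policy(ROBOTS_TXT)
    candidates = [
        "https://shop.example/products/1",
        "https://shop.example/checkout/cart",
    ]
    print(allowed_urls(policy, "example-crawler", candidates))
    print(policy.crawl_delay("example-crawler"))
```

Note that robots.txt is a convention that cooperative bots follow voluntarily; it does not technically prevent a scraper from requesting a disallowed page.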

Replacement for web services

Screen scrapers can be used as a replacement for web services. This is of particular interest to companies that want to provide their customers with specific analytical data on a website. Running a dedicated web service for this purpose involves high costs, so screen scrapers, which extract the data directly, are the more cost-effective option.

Remixing

Remixing, or creating a mashup, combines content from different web services into a new service. Remixing is often done via programming interfaces (APIs); if no such APIs are available, screen scraping is used instead.

Misuse

The misuse of web scraping, also called web harvesting, can pursue a variety of objectives and cause collateral damage:

Price grabbing: One special form of web scraping is called price grabbing. Here, retailers use bots to extract a competitor’s product prices in order to intentionally undercut them and acquire customers. Thanks to the great transparency of prices on the internet, customers quickly end up going to the next-cheapest retailer, which results in greater price pressure.

Content/product grabbing: Instead of prices or price structures, in content grabbing bots target the content of the website. Attackers copy intricately designed product pages in online shops true to the original and use the expensively created content for their own e-commerce portals. Online marketplaces, job exchanges and classified ads are also popular targets of content grabbing.

Increased loading times: Web scraping wastes valuable server capacity. Large numbers of bots constantly reload product pages while searching for new pricing information. This results in slower loading times for human users, especially during peak periods. If it takes too long to load the desired web content, customers quickly turn to the competition.

Phishing: Cyber criminals use web scraping to grab email addresses published on the internet and use them for phishing. In addition, criminals can scrape a site to build a deceptively realistic copy of it for phishing activities.

06

Legal framework: is screen scraping legal?

Many forms of web scraping are permitted by law. This applies, for example, to online portals that compare the prices of different retailers. The German Federal Court of Justice clarified this in a 2014 ruling: as long as no technical protection against screen scraping is circumvented, scraping does not constitute an anti-competitive obstruction.

However, web scraping becomes a problem when it infringes copyright law. Anyone who integrates copyrighted material into a website without citing the source is acting unlawfully.

In addition, if web scraping is misused, for phishing for example, the scraping itself may not be unlawful, but the activities that follow may be.